by Max Marchi and Jim Albert (2014, CRC Press)

The Sabermetric bookshelf, #3

Here we have the perfect book for anyone who stumbles across this blog--the intersection of R and baseball data. The open source statistical programming environment of R is a great tool for anyone analyzing baseball data, from the robust analytic functions to the great visualization packages. The particular readership niche might be small, but as both R and interest in sabermetrics expand, it's a natural fit.

And one would be hard pressed to find better qualified authors, writers who have feet firmly planted in both worlds. Max Marchi is a writer for Baseball Prospectus, and it's clear from the ggplot2 charts in his blog entries (such as this entry on left-handed catchers) that he's an avid R user.

Jim Albert is a Professor in the Department of Mathematics and Statistics at Bowling Green State University; three of his previous books sit on my bookshelf. Curve Ball, written with Jay Bennett, is pure sabermetrics, and one of the best books ever written on the topic (and winner of SABR's Baseball Research Award in 2002). Albert's two R-focussed books, the introductory R by Example (co-authored with Maria Rizzo) and the more advanced Bayesian Computation with R, are intended as supplementary texts for students learning statistical methods. Both employ plenty of baseball examples in their explanations of statistical analysis using R.

In Analyzing Baseball Data with R, Marchi and Albert consolidate this joint expertise and have produced a book that is simultaneously interesting and useful.

The authors take a very logical approach to the subject at hand. The first chapter concerns the three sources of baseball data that are referenced throughout the book:

- the annual summaries contained within the Lahman database,

- the play-by-play data at Retrosheet, and

- the pitch-by-pitch PITCHf/x data.

The chapter doesn't delve into R, but summarizes the contents of the three data sets, and takes a quick look at the types of questions that can be answered with each.

The reader first encounters R in the second and third chapters, titled "Introduction to R" and "Traditional Graphics". These two chapters cover many of the basic topics that a new R user needs to know, starting with installing R and RStudio, then moving on to data structures like vectors and data frames, objects, functions, and data plots. Some of the key R packages are also covered in these chapters, both functional packages like plyr and data packages, notably Lahman, the data package containing the Lahman database.

The material covered in these early chapters is what I learned early on in my own R experience, but whereas I had relied on multiple sources and an unstructured ad hoc approach, in Analyzing Baseball Data with R a newcomer to R will find the basics laid out in a straightforward and logical progression. These chapters will most certainly help newcomers climb the steep learning curve faced by every neophyte R user. (It is worth noting that the "Introduction to R" chapter relies heavily on a fourth source of baseball data -- the 1965 Warren Spahn Topps card, issued in the last season of his storied career. Not all valuable data are big data.)

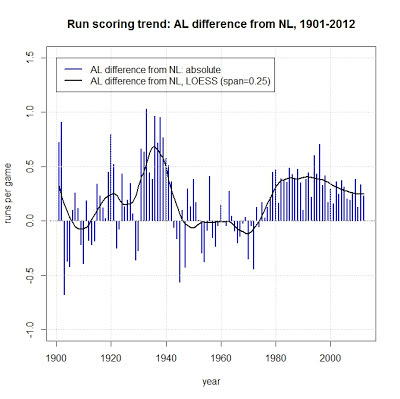

From that point on, the book tackles some of the core concepts of sabermetrics. These include the relationship between runs and wins, run expectancy, career trajectories, and streaky performances. As the authors work through these and other topics, they weave in information about additional R functions and packages, along with statistical and analytic concepts. As one example, one chapter introduces Markov chains in the context of using R to simulate half-inning, season, and post-season outcomes.
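To give a flavour of the runs-and-wins topic, here is a minimal sketch of one well-known model of that relationship, Bill James's Pythagorean expectation. This is my own illustration in Python, not the book's R code, and I am not claiming it is the exact formulation the authors use; the function name and the team totals are made up for the example.

```python
def pythagorean_win_pct(runs_scored, runs_allowed, exponent=2.0):
    """Estimate winning percentage from season run totals.

    Uses the Pythagorean expectation: RS^k / (RS^k + RA^k).
    The classic exponent k is 2; other values are sometimes used,
    so it is left as a parameter here.
    """
    if runs_scored < 0 or runs_allowed < 0:
        raise ValueError("run totals must be non-negative")
    rs = runs_scored ** exponent
    ra = runs_allowed ** exponent
    if rs + ra == 0:
        raise ValueError("at least one run total must be positive")
    return rs / (rs + ra)


# Hypothetical team (not real data): 800 runs scored, 700 allowed.
win_pct = pythagorean_win_pct(800, 700)
expected_wins = 162 * win_pct  # scaled to a 162-game season
print(round(win_pct, 3), round(expected_wins, 1))
```

Comparing a team's actual wins with the estimate from a function like this is a common starting point for asking whether a club has over- or under-performed its run differential.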

The chapter "Exploring Streaky Performances" provides the opportunity to take a closer look at how Analyzing Baseball Data with R compares to Albert's earlier work. In this case, the chapter uses moving average and simulation methodologies, providing the data and code to examine recent examples (Ichiro and Raul Ibanez). This is methodologically similar to what is described in Curve Ball, but with the addition of "here's the data and the code so you can replicate the analysis yourself". This approach differs substantially from the much more mathematical content in Albert's text Bayesian Computation with R, where the example of streaky hitters is used to explore beta functions and the laplace R function.
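For readers who have not met the moving average idea before, here is a minimal sketch of how a rolling batting average over a fixed window of games might be computed. Again, this is my own Python illustration rather than the authors' R code; the function name, the window width, and the game log are all hypothetical.

```python
def moving_batting_average(hits, at_bats, window):
    """Batting average over each run of `window` consecutive games.

    `hits` and `at_bats` are per-game counts in chronological order.
    Returns one value per window position; a window with zero
    at-bats yields NaN rather than raising an error.
    """
    if len(hits) != len(at_bats):
        raise ValueError("hits and at_bats must have the same length")
    if window < 1 or window > len(hits):
        raise ValueError("window must be between 1 and the number of games")
    averages = []
    for start in range(len(hits) - window + 1):
        h = sum(hits[start:start + window])
        ab = sum(at_bats[start:start + window])
        averages.append(h / ab if ab > 0 else float("nan"))
    return averages


# Hypothetical six-game log (not real data), three-game window.
game_hits = [1, 0, 2, 3, 0, 1]
game_at_bats = [4, 4, 4, 5, 3, 4]
for avg in moving_batting_average(game_hits, game_at_bats, window=3):
    print(round(avg, 3))
```

Plotting these values against game number makes hot and cold stretches visible; the open question, which simulation is used to address, is whether such swings are larger than chance alone would produce.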

Woven among these later chapters are also ones that put R first, and use baseball data as the examples. A chapter devoted to the advanced graphics capabilities of R splits time between the packages lattice and ggplot2. The examples used in this chapter include visualizations that are used to analyze variations in Justin Verlander's pitch speed.

Each chapter of the book also includes "Further Reading" and "Exercises", which provide readers with the chance to dig deeper into the topic just covered and to apply their new-found skills. The exercises are consistently interesting and often draw on previous sabermetric research. Here are a couple of examples:

- "By drawing a contour plot, compare the umpire's strike zone for left-handed and right-handed batters. Use only the rows of the data frame where the pitch type is a four-seam fastball." (Chapter 7)

- "Using [Bill] James' similarity score measure ..., find the five hitters with hitting statistics most similar to Willie Mays." (Chapter 8)

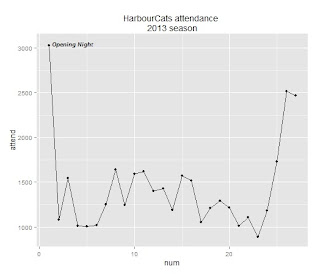

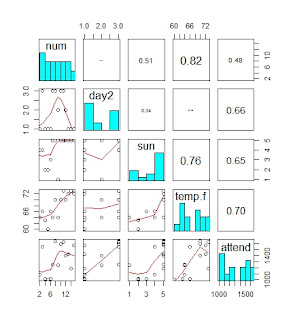

The authors have established a companion blog (http://baseballwithr.wordpress.com/), which expands on the analyses presented in the book. For example, the entry from December 12, 2013 goes deeper into ggplot2 capabilities to enhance and refine charts that were described in the book.

Analyzing Baseball Data with R provides readers with an excellent introduction to both R and sabermetrics, using examples that provide nuggets of insight into baseball player and team performance. The examples are clear and consistently interesting, and the R code is well explained and easy to follow. All told, Analyzing Baseball Data with R will be an extremely valuable addition to the practicing sabermetrician's library, and is most highly recommended.

Additional Resources

Jim Albert and Jay Bennett (2003), Curve Ball: Baseball, Statistics, and the Role of Chance in the Game (revised edition), Copernicus Books.

Jim Albert and Maria Rizzo (2011), R by Example, Springer.

Jim Albert (2009), Bayesian Computation with R (2nd edition), Springer.

An interview with Max Marchi, originally posted at MilanoRnet and also available through R-bloggers

-30-